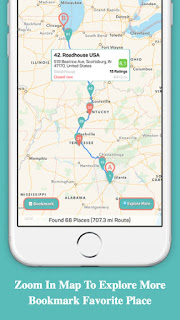

Near Road (iPhone App)

https://itunes.apple.com/us/app/near-road/id1041798311?mt=8

Search Restaurant, Gas Station, Shop, Hotel, Bar ... Near Your Road Trip

Unlike Point-Based Search of Yelp and Foursquare App, Near Road is Line-Based Search.

Main Features:

1. Retrieve place information from Yelp or Foursquare, such as ratings, reviews, distance, address, phone number, hours ...

2. Serve with 2 map data sources: Apple Maps and Google Maps.

3. Autocomplete addresses and searches.

4. Zoom in map to explore more places near your route.

5. Bookmark favorite places.

6. Share place information via Message, Email ...

7. Choose multi-transportation types: driving, bicycling and walking (Advanced Option).

8. Search places by limiting the lowest rating (Advanced Option).

9. Search places by limiting search radius (Advanced Option).

Near Road can work almost anywhere in the world. It is absolutely your useful travel companion.

Intelligent Visual World

Computer Vision & Image Processing & Machine Learning & Pattern Recognition

Wednesday, December 16, 2015

Monday, January 19, 2015

You're at the Breaking Point

Today my old friend, Dan, gave me a book for reading. I just glanced it over. Then I was quickly attracted by the following paragraph:

"...

If you need new employment, set aside time after work each day to seek it. During the workday, sharpen your skills that make you a better candidate for the next position. Keep your cool and stay professional. Don't burn any bridges or make hasty decisions, because it is easiest to get a new job when you still have one. Deal with your stress with exercise and time with trusted friends.

..."

-------------- Rising Above A Toxic Workplace

Thursday, January 15, 2015

Analysis of Locality Sensitive Hashing

Idea:

Locality

Sensitive Hashing (LSH) is one of the popular indexing algorithms, which can be

used for image indexing of image retrieval. The key idea of LSH is to hash the

points using several hash functions so as to ensure that, for each function,

the probability of the collision is much higher for objects which are close to

each other than for those which are far apart. Then, one can determine near

neighbors by hashing the query point and retrieving elements stored in buckets

containing that point.

Definition:

In order to solve an R-NN problem, given a point set S and distance metric

function d, locality sensitive hashing function h can be described as follows:

H =

{h: S -> U}, H is a family of hashing functions, for any v, q in S,

If v

in B(q, r1), then Pr[h(q)=h(v)] >= p1;

If v

not in B(q, r2), then Pr[h(q)=h(v)] <= p2.

Here,

B(q, r) = {v in S | d(v, q) <= r}, Pr is probability, p1 > p2, r1 <

r2.

Given

parameters (r1, r2, p1, p2), we can amplify the gap between the “high”

probability p1 and “low” probability p2 by concatenating several functions. Define

a function family G = {g: S -> Uk} such that g(v) = (h1(v), …, hk(v)), where

hi in H. For an integer L, we choose L functions g1, …, gL from G,

independently and uniformly at random. During preprocessing, we store each v in

the bucket gi(v), for I = 1, …, L. Since the total number of buckets may be

large, we retain the non-empty buckets by resorting to hashing.

To

process a query q, we search all buckets g1(q), …, gL(q); as it is possible

(though unlikely) that the total number of points stored in those buckets is

large, we interrupt search after finding first 3L points (including

duplicates). Let v1, …, vt be the points encountered therein. For each vj, if vj

in B(q, r2), then we return YES and vj, else we return NO.

Problems:

- How can we select k and L? If k is large, the collision probability should be reduced and the calculation time is also large; If k is small, it’s hard to distinguish the points which are far apart. If k has already be fixed, it is easy to find the optimal value of L which will guarantee that the fraction of false negatives are no more than a user specified threshold.

- How can we save memory? Generally, given any bucket, we should store k-dimensional hash value and a pointer to the data (e.g., image) for each point. Therefore, it will require L*(k+1) words of memory totally. We can reduce the memory requirement by not storing the hash value explicitly as concatenation of k projections, but instead hash these k values in turn to get a single word (index) for the hash, which should be L*2.

Wednesday, January 14, 2015

Content Based Image Retrieval

Content-based Image

Retrieval (CBIR) is

to use one or more image features to build an image indexing structure. Then

given a query image, we can quickly retrieve a list of similar product images.

1. Image features: We can adopt four kinds of image feature: color-based (e.g., Histogram), texture-based (e.g., Local Binary Pattern), shape-based, and mixed (e.g., Joint Composite Descriptor). The more efficient and independent features we adopt, the higer retrieving accuracy we obtain. However, if we adopt too many features, especially high-dimensional features, we should spend more time in getting the retrieval result.

2. Image indexing: Efficient image indexing algorithms (e.g., Locality-Sensitive Hashing) can dramatically decrease the retrieval time.

Deep Learning Reference

Introduction

Deep

learning is a set of algorithms in machine learning that attempt to

model high-level abstractions in data by using architectures composed of

multiple non-linear transformations.

Deep

learning is part of a broader family of machine learning methods based

on learning representations. An observation (e.g., an image) can be

represented in many ways (e.g., a vector of pixels), but some

representations make it easier to learn tasks of interest (e.g., is this

the image of a human face?) from examples, and research in this area

attempts to define what makes better representations and how to create

models to learn these representations.

Various deep

learning architectures such as Deep Neural Networks, Convolutional Deep

Neural Networks, and Deep Belief Networks have been applied to fields

like computer vision, automatic speech recognition, natural language

processing, and music/audio signal recognition where they have been

shown to produce state-of-the-art results on various tasks.

Literature

Deep Machine Learning – A New Frontier in Artificial Intelligence Research – Itamar Arel, Derek C. Rose, and Thomas P. Karnowski.

Info: Briefly introduce some popular deep learning algorithms, such as Convolutional Neural Networks and Deep Belief Networks ...

Gradient-Based Learning Applied to Document Recognition - Yann Lecun , Léon Bottou , Yoshua Bengio , Patrick Haffner

Info: Present traditional Convolutional Neural Networks ...

Notes on Convolutional Neural Networks - Jake Bouvrie

Info: Discuss the derivation and implementation of Convolutional Neural Networks, followed by a few straightforward extensions ...

Flexible, High Performance Convolutional Neural Networks for Image Classification - Dan C. Ciresan, Ueli Meier, Jonathan Masci, Luca Maria Gambardella, Jürgen Schmidhuber

Info: Present a fast, fully parameterizable GPU implementation of Convolutional Neural Network variants ...

Tiled Convolutional Neural Networks - Quoc V. Le, Jiquan Ngiam, Zhenghao Chen, Daniel Chia, Pang Wei Koh, Andrew Y. Ng

Info: Present Tiled Convolutional Neural Networks ...

ImageNet Classification with Deep Convolutional Neural Networks - Krizhevsky, A., Sutskever, I. and Hinton, G. E.

Info: Provide some improvements on traditional Convolutional Neural Networks for large image database training ...

Improving Neural Networks by Preventing Co-adaptation of Feature Detectors - G. E. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever and R. R. Salakhutdinov

Info: Describe Dropout for deep learning ...

Maxout Networks - Ian J. Goodfellow, David Warde-Farley, Mehdi Mirza, Aaron Courville, Yoshua Bengio

Info: Describe Maxout for deep learning ...

Regularization of Neural Network using DropConnect - Li Wan, Matthew Zeiler, Sixin Zhang, Yann LeCun, Rob Fergus

Info: Describe DropConnect for deep learning ...

Convolutional Networks and Applications in Vision - Yann Lecun , Koray Kavukcuoglu , Clément Farabet

Info: Describe unsupervised Convolutional Neural Networks ...

Document

Deep Learning Document

Theano Document

Pylearn2 Document

Developing Environment

1. Anaconda (Python + Numpy + Scipy + Matplotlib + ...)Download: http://continuum.io/downloads

2. Theano

Download: https://github.com/Theano/Theano

3. Pylearn2

Download: https://github.com/lisa-lab/pylearn2

Architecture

1. Convolutional Neural Networks

2. Deep Belief Networks

Public Database

1. The CIFAR-10 datasetDownload: http://www.cs.toronto.edu/~kriz/cifar.html

2. The CIFAR-100 dataset

Download: http://www.cs.toronto.edu/~kriz/cifar.html

3. NORB Object Recognition Dataset

Download: http://www.cs.nyu.edu/~ylclab/data/norb-v1.0/

Understanding of Convolutional Neural Networks

Convolutional Neural Networks (CNNs) is one of the popular deep learning algorithms. CNNs were proposed as a deep learning framework that is motivated by minimal data preprocessing requirements. It can also be regarded as a feature extraction process.

CNNs is a family of multi-layer neural networks. It is comprised of one or more convolutional layers (often with a subsampling layer) and then followed by one or more fully connected layers as in standard multilayer neural network.

The architecture of a CNNs is designed to take advantage of the 2D structure of an input image. This is achieved with local connections and tied weights followed by some form of pooling which results in translation invariant features. Another benefit of CNNs is that they are easier to train and have many fewer parameters than fully connected networks with the same number of hidden units because of weight sharing strategy.

Given an input RGB image (M x N), the first convolutional layer has k filters (or weight matrix) of size m x n x q. Here, q equals 3 (the number of input maps: RGB); k is the number of output maps. Actually, these filters can be represented as a 4D tensor with size k x q x m x n. The size of output map should be (M-m+1) x (N-n+1) because of convolution. If the next layer is subsampling layer, each map is then subsampled typically with mean or max pooling over p x p contiguous regions. Either before or after the subsampling layer, an additive bias and sigmoid (or tanh) nonlinearity is applied to each feature map. The outputs of hyperbolic tangent function will typically be near zero, the outputs of a sigmoid will be non-zero on average. However, normalizing your training data to have mean 0 and variance 1 along the features can often improve convergence during gradient descent.

Tuesday, January 13, 2015

Hadoop Guide for Beginners

Post:

A Beginners Guide to Hadoop

URL: http://blog.matthewrathbone.com/2013/04/17/what-is-hadoop.html

System Design:

A Beginners Guide to Hadoop

URL: http://blog.matthewrathbone.com/2013/04/17/what-is-hadoop.html

System Design:

Google File System http://research.google.

Google Bigtable http://research.

Google MapReduce http://research.

Subscribe to:

Comments (Atom)